AI & Jobs

Yale came out with a new study about the impact of AI on the labor market. What does it get right and what are its limitations?

TL;DR

Yale’s new study claims AI hasn’t disrupted U.S. jobs.

But that stability likely reflects measurement failure, not economic calm.

The study relies on outdated job categories that miss how AI alters tasks within roles.

Yale’s supporting data, from OpenAI and Anthropic, conflict and cover only fragments of the economy.

The real issue isn’t that AI hasn’t changed work; it’s that our tools can’t see the change.

Three years into the age of ChatGPT, the labor market looks boringly normal. Employment is high, unemployment is low, and the Bureau of Labor Statistics’ categories have barely budged. If you read the new study by Yale University’s Budget Lab—Evaluating the Impact of AI on the Labor Market—you’d think that artificial intelligence has been all hype and no displacement.

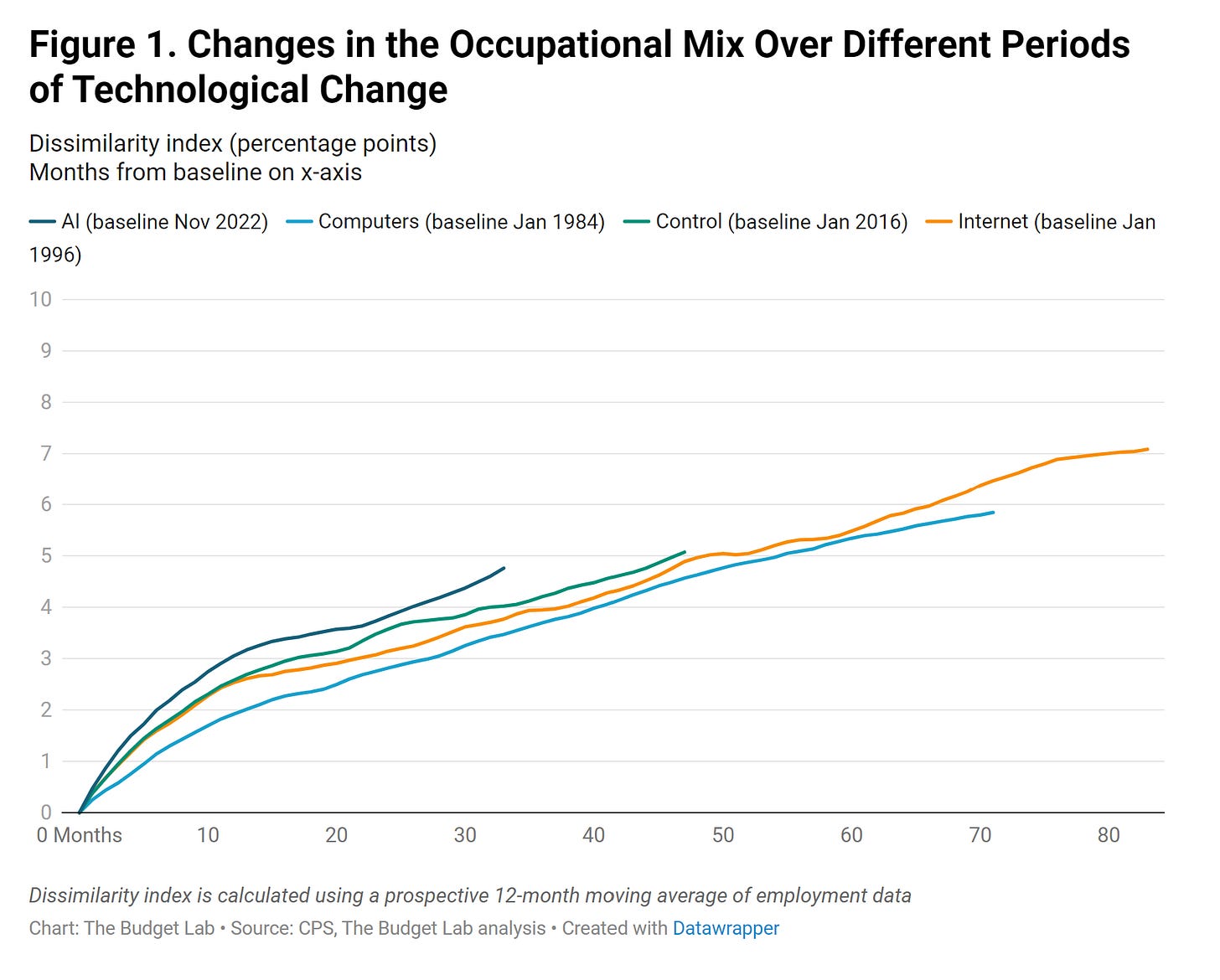

The Yale team took a data-first approach. They asked whether the composition of U.S. jobs has shifted faster since late 2022 than during earlier waves of technological disruption, such as the PC and internet eras. They built an occupational dissimilarity index from Current Population Survey (CPS) data and tracked the job mix month by month since ChatGPT’s debut. The finding: the mix is changing a little faster than in those earlier episodes, but only marginally. Nothing looks like a major break from the historical trend.

Then they overlaid two additional data sources: OpenAI’s estimates of how exposed different jobs are to automation by large language models, and Anthropic’s measurements of actual task-level usage of its Claude chatbot. Both showed remarkable stability. The jobs most at risk aren’t disappearing, and unemployment hasn’t risen in highly exposed occupations. By every measurable indicator, AI has yet to leave a macroeconomic footprint.

And, indeed, that’s the conclusion of VC and political operative David Sacks.[1]

If only the story were that simple.

The comforting illusion of smooth curves

The study’s central metric, the “dissimilarity index,” measures how different today’s occupational composition is from the baseline of November 2022. It’s clever and intuitive: if AI were destroying or creating jobs en masse, the occupational mix would lurch. Instead, it has drifted by roughly a single percentage point more than during the internet boom. The labor market, in this view, is adapting smoothly.
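For readers who want the mechanics, here is a minimal sketch of an occupational dissimilarity index. It assumes the standard Duncan-style definition: half the sum of absolute differences in occupational employment shares between a baseline month and a later month. The Budget Lab’s exact construction may differ, and the occupation names and counts below are hypothetical.

```python
from typing import Mapping


def shares(counts: Mapping[str, float]) -> dict[str, float]:
    """Convert employment counts per occupation into shares of total employment."""
    total = sum(counts.values())
    return {occ: n / total for occ, n in counts.items()}


def dissimilarity_index(baseline: Mapping[str, float], current: Mapping[str, float]) -> float:
    """Duncan-style index in percentage points: half the sum of absolute share differences.

    Occupations missing from one period are treated as having zero employment there.
    """
    base, cur = shares(baseline), shares(current)
    occupations = set(base) | set(cur)
    return 50.0 * sum(abs(cur.get(o, 0.0) - base.get(o, 0.0)) for o in occupations)


# Hypothetical employment counts (millions) for a toy three-occupation economy.
nov_2022 = {"paralegals": 4.0, "marketing specialists": 3.0, "software developers": 3.0}
later = {"paralegals": 3.8, "marketing specialists": 3.0, "software developers": 3.2}

print(round(dissimilarity_index(nov_2022, later), 2))  # → 2.0
```

Under this definition the index reads 0 when the mix is unchanged and 100 when the two periods share no occupations at all. Note that it only sees headcount by label: anything that happens inside a label is invisible to it.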

But the calm is partly a statistical illusion. The CPS categories used to construct this index are crude instruments. Software developers and data scientists are still lumped into broad occupational bins designed decades ago. A law firm that replaces half its paralegals with GPT-4 barely moves the national occupation share, and the paralegals who remain, now doubling their output, are still counted simply as paralegals. A marketing department that adds prompt engineering duties to existing staff still counts as “marketing specialists”. The underlying work can transform while the occupational label stands still.

Worse, CPS data miss the periphery: the freelancers, contractors, and micro-entrepreneurs who are often the first to feel technological change. AI affects tasks long before it affects titles, but the Yale measure can only see the latter. It’s like using census data to detect the arrival of the internet: by the time it shows up in the occupational mix, the real action is long past.

The tyranny of short timescales

An additional problem with Yale’s study is that its 33-month window, roughly from ChatGPT’s launch to mid-2025, is too short to capture the lag between adoption and adjustment. It registers the fever of the AI boom but not all of its effects. Organizational change operates on slower, lumpier rhythms: budgets refresh annually, labor contracts last years, and new workflows take quarters to standardize.[2]

If firms are experimenting with copilots now, layoffs and job redesigns might not show up until 2026–27. By analogy, the iPhone launched in 2007; measurable productivity effects didn’t appear until the mid-2010s. The absence of disruption today says little about whether it’s coming. It may simply reflect inertia.

Exposure isn’t impact

Additionally, the report relies heavily on two proxy datasets: OpenAI’s exposure scores and Anthropic’s usage logs. Each is ingenious and fatally limited.

OpenAI’s exposure metric is theoretical: it estimates which occupations could, in principle, see GPT-4 cut task completion time by at least 50%. It’s a model of potential substitution, not observed behavior. Many exposed sectors, like clerical support, have barely adopted the tools, while others, like software and media, are already saturated. Treating exposure as impact assumes adoption is homogeneous and instantaneous, which it never is.
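A toy illustration of why exposure is not impact: if realized effects scale with how widely a tool is actually used, two occupations with identical exposure can end up in very different places. The exposure scores, adoption rates, and the simple multiplicative model below are all hypothetical assumptions for illustration, not figures from OpenAI, Anthropic, or Yale.

```python
from dataclasses import dataclass


@dataclass
class Occupation:
    name: str
    exposure: float  # hypothetical share of tasks an LLM could speed up by at least 50%
    adoption: float  # hypothetical share of workers actually using such tools


def realized_share(occ: Occupation) -> float:
    """Share of tasks actually affected, assuming (simplistically) exposure times adoption."""
    return occ.exposure * occ.adoption


occupations = [
    Occupation("clerical support", exposure=0.6, adoption=0.1),
    Occupation("software development", exposure=0.6, adoption=0.8),
]

for occ in occupations:
    print(f"{occ.name}: exposure {occ.exposure:.0%}, realized {realized_share(occ):.0%}")
# clerical support: exposure 60%, realized 6%
# software development: exposure 60%, realized 48%
```

Reading exposure directly as impact amounts to setting adoption to 1.0 for every occupation, which is exactly the homogeneity assumption criticized above.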

Anthropic’s data are the opposite: empirical but skewed. Usage is drawn from people actually talking to Claude, a population that over-indexes on developers, writers, and tech workers. It omits most enterprise API calls, internal copilots, and integrated software workflows, which is where generative AI is spreading fastest. From that narrow slice, the study infers that AI usage is stable. But that stability might simply be a property of Anthropic’s user base, not of the economy.

The two datasets also disagree. OpenAI finds large swaths of cognitive work theoretically automatable; Anthropic sees most usage concentrated in coding and writing. Yale acknowledges that the two measures correlate only weakly, yet still treats both as reasonable lenses on AI’s labor impact. The risk is circular logic: using incomplete measures to confirm that nothing is changing.

The “no acceleration” fallacy

A subtler flaw lies in the study’s causal logic. The authors argue that because the rate of occupational churn hasn’t accelerated, AI isn’t driving labor-market change. But that presumes the effects of AI would show up as accelerated churn rather than as offsetting shifts.

Imagine AI doubles productivity for accountants while creating a wave of new AI-related compliance roles. The mix of occupations might stay stable—still “accountants and auditors”—even as the underlying skill composition, wages, and career trajectories mutate. A stable macro index can mask enormous micro-level churn.
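The accountant example can be made concrete. In the sketch below, a hypothetical firm moves staff from bookkeeping-focused work into AI compliance work. If both kinds of work are coded to the same broad bin, a Duncan-style dissimilarity index (an assumed definition; Yale’s may differ) reads zero; at a finer, hypothetical role-level coding, the same shift registers. All labels and counts are invented for illustration.

```python
from collections import defaultdict
from typing import Mapping


def dissimilarity_index(a: Mapping[str, float], b: Mapping[str, float]) -> float:
    """Half the sum of absolute employment-share differences, in percentage points."""
    ta, tb = sum(a.values()), sum(b.values())
    keys = set(a) | set(b)
    return 50.0 * sum(abs(b.get(k, 0.0) / tb - a.get(k, 0.0) / ta) for k in keys)


def coarsen(counts: Mapping[str, float], mapping: Mapping[str, str]) -> dict[str, float]:
    """Collapse fine-grained roles into broad occupational bins by summing headcount."""
    out: defaultdict[str, float] = defaultdict(float)
    for role, n in counts.items():
        out[mapping[role]] += n
    return dict(out)


# Hypothetical fine-grained roles; both accountant roles map to one broad bin.
to_bin = {
    "bookkeeping-focused accountant": "accountants and auditors",
    "AI compliance accountant": "accountants and auditors",
    "other occupations": "other occupations",
}
before = {"bookkeeping-focused accountant": 90, "AI compliance accountant": 10, "other occupations": 900}
after = {"bookkeeping-focused accountant": 50, "AI compliance accountant": 50, "other occupations": 900}

print(dissimilarity_index(coarsen(before, to_bin), coarsen(after, to_bin)))  # → 0.0
print(round(dissimilarity_index(before, after), 2))  # → 4.0
```

The broad index is blind to the reshuffle by construction, because the change happens entirely inside one bin.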

Similarly, AI’s productivity effects could reallocate value rather than headcount. Ten workers producing twice as much output look stable to the CPS but transformational to the firm. The Yale study implicitly assumes that structural change must manifest as compositional change. That’s the fallacy.

When the map hides the terrain

The authors repeatedly note that better data are needed: richer usage logs, standardized reporting from AI vendors, finer-grained occupation codes. In other words, the instruments are not yet tuned to the phenomenon they’re meant to measure.

This is a familiar dynamic in technological history. Economic historians’ reconstructions of the early industrial revolution show productivity growth creeping along for decades even as factories multiplied, and there were no national accounts at the time to register mechanization as it happened. The Yale study may be documenting the same effect for AI.

It also shows how dependent our macro conclusions have become on the data of a handful of private firms. OpenAI and Anthropic are not neutral measurement agencies; their exposure and usage datasets are company-produced snapshots, built on proprietary models and logs and shaped by each firm’s own research priorities. Yet they’ve become the empirical foundation of academic labor analysis.

What the study gets right

For all these weaknesses, the Yale report performs an essential service. It reins in the apocalyptic rhetoric that every chatbot update equals mass unemployment. The historical comparison is a healthy reminder that even revolutionary technologies take time to diffuse. The world didn’t digitize overnight in 1996, and it won’t auto-generate itself in 2025.

The report also helps disentangle hype from signal. Many sectors showing large AI exposure (media, finance, professional services) already had high job churn before ChatGPT. The authors are correct to note that attributing every wiggle in the data to AI is sloppy. Their caution is empirically justified; their inference, less so.

The real question: where does AI hide?

If we accept that the aggregate data are too coarse, the next step is to look below the surface. The earliest labor effects of generative AI may appear not as layoffs but as scope compression: fewer analysts per project, fewer associates per partner, fewer writers per newsroom. Those are micro-productivity shifts invisible to occupational data but consequential for wages, career ladders, and entry-level opportunity.

Similarly, AI may be triggering reallocation across firms rather than sectors. A hundred small startups automating back-office work can offset the headcount expansion of a few large incumbents, leaving net employment unchanged while hollowing out the middle of the market. Again: stability in aggregate can coexist with turmoil underneath.
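One standard way to see turmoil beneath a flat aggregate is to compare net employment change with gross job reallocation: jobs created at growing firms plus jobs destroyed at shrinking ones, in the spirit of the gross job gains and losses that the BLS publishes in its Business Employment Dynamics data. The definitions here are simplified, and the firm-level numbers are hypothetical, chosen to mirror the scenario above: a few large incumbents adding jobs while many smaller firms shed roughly the same number.

```python
from typing import Iterable


def job_flows(changes: Iterable[int]) -> tuple[int, int, int]:
    """Return (net change, gross creation, gross destruction) from per-firm headcount changes."""
    deltas = list(changes)
    creation = sum(d for d in deltas if d > 0)
    destruction = -sum(d for d in deltas if d < 0)
    return creation - destruction, creation, destruction


# Hypothetical: 3 incumbents each add 100 jobs; 100 smaller firms each shed 3.
firm_changes = [100] * 3 + [-3] * 100

net, created, destroyed = job_flows(firm_changes)
print(net, created + destroyed)  # → 0 600
```

Net employment is flat, yet 600 jobs were created or destroyed. An aggregate that reports only the net figure would call this market stable.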

The next frontier: measuring the invisible

What the Yale study really reveals is a measurement crisis. We lack the telemetry to observe digital labor transformation in real time. If AI diffusion continues through SaaS layers, API calls, and embedded copilots, the relevant data will sit inside corporate dashboards, not government surveys. Without mandatory or voluntary disclosure from AI vendors, public researchers are flying blind.

The Budget Lab hints at this in its conclusion, calling for comprehensive, privacy-protected usage data from all major AI labs. That’s exactly right. Without open telemetry, the debate over AI’s labor impact will remain a contest between anecdotes and lagging indicators.

The bottom line

Yale’s data show that the U.S. labor market is stable for now. But stability is not stasis. It may be the quiet before adaptation, or the artifact of crude instruments applied to a fast-moving system.

If you widen the aperture from occupations to tasks, or from jobs to workflows, the world looks more fluid. AI is already rewiring what work feels like: fewer keystrokes, faster drafts, leaner teams. Those shifts will take years to surface in official data, and by the time they do, the real transformation will already be old news.

The lesson from Yale’s study is not that AI hasn’t changed the labor market. It’s that our measurement systems haven’t caught up to the world we’ve built.

If you enjoy this newsletter, consider sharing it with a colleague.

I’m always happy to receive comments, questions, and pushback. If you want to connect with me directly, you can:

follow me on Twitter,

connect with me on LinkedIn, or

send an email to dave [at] davefriedman dot co. (Not .com!)

[1] This is not a political newsletter, so I will share the political valence of Sacks’ tweet in this footnote. Aside from being a venture capitalist, David Sacks is also the Trump administration’s AI and Crypto Czar, with an official White House Twitter account. The Trump administration, and, by extension, Sacks, has a vested interest in arguing that AI has not displaced jobs. One can reasonably expect the specter of AI-induced job losses to be a big issue in the upcoming midterm elections, and the results of the midterms will affect Trump’s ability to maneuver in the latter half of his second term.
